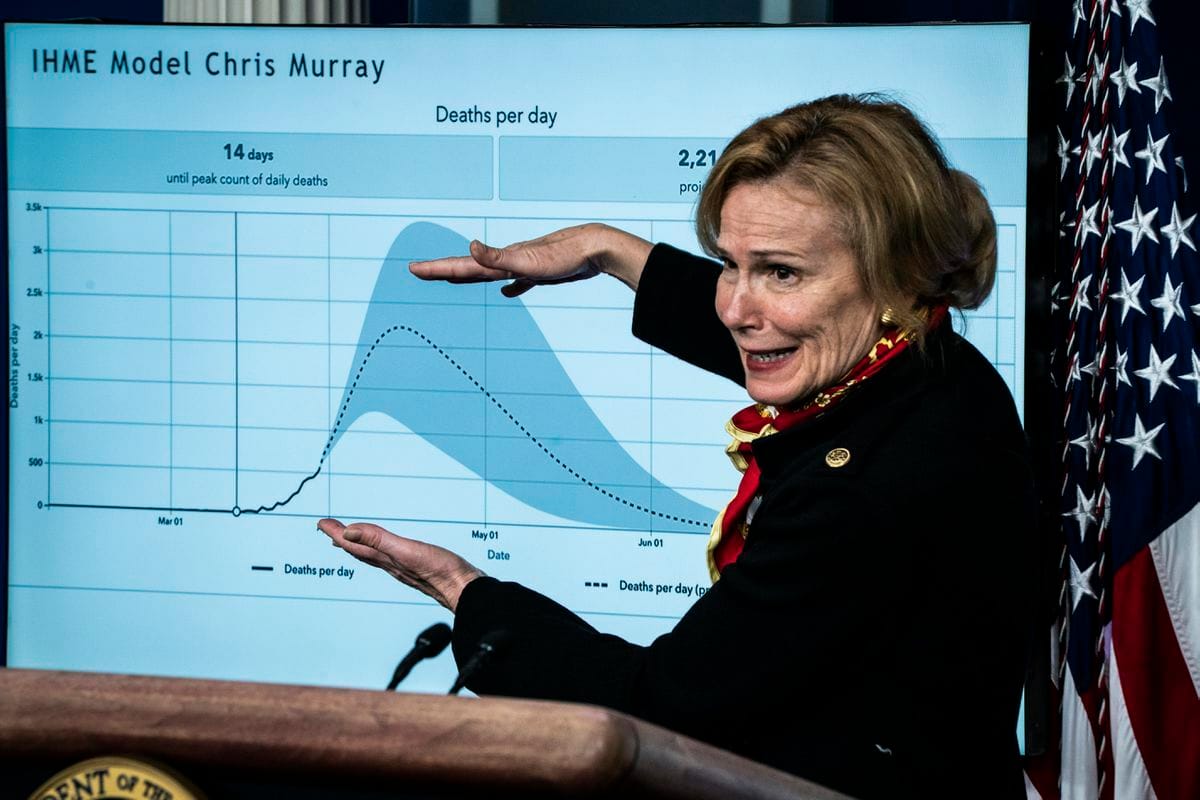

Most political elites in the United States right now seem to be invoking one epidemiological model from the Institute for Health Metrics and Evaluation (IHME) at the University of Washington.

Deborah Birx, leader of the White House coronavirus team, referring to the IHME forecasts.

Apparently there is a more complicated story about the forecasting models that have been considered by the US government task force, but elite messaging at this moment seems to have converged on a model that sees roughly April 15th as the peak of destruction. And the main data source for that seems to be the graph above. A regularly updated version is available here.

As I write this, many people are attacking the projections for over-estimating the severity.

In this post, I want to articulate a few reasons for fearing that the model is under-estimating the severity. At the time I’m writing this, I honestly don’t know how much confidence to have in these concerns, so I want to articulate them and let others be the judge…

The IHME has a history of opaque and incorrect measurement

A 2018 article in BioMed Research International analyzed the IHME’s methodology in some of their past efforts. This article really, really does not inspire confidence…

Apparently the IHME is known for using opaque methods and refusing to share information in response to inquiries, which is a cardinal sin and a huge red flag in any scientific research outside the private sector.

IHME reported 817,000 deaths between the ages of 5 and 15… In fact, when we look at the UN report data, the deaths are 164 million.

Did anyone else have to read that twice? This kind of discrepancy really makes you wonder what exactly is going on under the hood. But let’s give ’em the benefit of the doubt and carry on…

Even more alarming to me is this:

IHME's methodology for measuring burden of disease has an unclear stage called “black box step.” In particular, only the Bayesian metaregression analysis and DisMod-MR were used to explain the YLD measurement method that should estimate the morbidities and the patients, but no specific method is described [2]. WHO requested sharing of data processing methods, but was informed of the inability to do so. For this, WHO researches were recommended to avoid collaborative work with IHME [8].

Does anyone else find this extremely troubling? I would really like to learn what possible reason the IHME, presumably a recipient of public funding, would have for declining to share methodological details. It is utterly insane to me that the US government would predicate its public forecasting on an institution that won’t even disclose basic methodological details.

The model assumes complete social distancing and, um, have you talked to your grandfather on the phone lately?

Am I missing something? It seems obvious that America is not anywhere near “complete” social distancing. I talk on the phone with my family in NJ, the hardest-hit state other than New York, and the vibe throughout my large working-class-to-middle-class family milieu is surprisingly nonchalant. I think most Americans are vaguely going along with public directions by now, but I really don’t see the average American taking it as seriously as would be needed to motivate completely rigorous social distancing.

So then the question becomes, how sensitive is the model to the social distancing assumption? Well, we are not allowed to know for some mysterious reason. So let’s try some stupidly crude back-of-the-napkin calculations. Deborah Birx said that with zero social distancing, we would expect between 1.5 and 2.2 million deaths (presumably based on the Imperial model). With complete social distancing, we would expect between 100,000 and 240,000 deaths (based on the IHME).

Extrapolating from that, if you think Americans are social distancing at a 50% level of rigor, then just split the difference: about 1 million deaths.
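To make the split-the-difference arithmetic explicit, here it is as a small Python sketch. It assumes, loudly, that deaths scale linearly with a distancing "rigor" parameter between the two scenario ranges Birx quoted; the `rigor` parameter and the `interpolate_deaths` function are my own hypothetical illustration, and real epidemic dynamics are not linear.

```python
# Crude linear interpolation between the two scenario ranges quoted above.
# Assumption (hypothetical): deaths scale linearly with distancing "rigor",
# where 0.0 = no distancing and 1.0 = complete distancing.

NO_DISTANCING = (1_500_000, 2_200_000)   # Birx's range, zero distancing
FULL_DISTANCING = (100_000, 240_000)     # Birx's range, complete distancing (IHME)


def interpolate_deaths(rigor: float) -> tuple[float, float]:
    """Return a (low, high) death range for a distancing rigor in [0, 1]."""
    if not 0.0 <= rigor <= 1.0:
        raise ValueError("rigor must be between 0 and 1")
    low = (1 - rigor) * NO_DISTANCING[0] + rigor * FULL_DISTANCING[0]
    high = (1 - rigor) * NO_DISTANCING[1] + rigor * FULL_DISTANCING[1]
    return low, high


for rigor in (0.0, 0.5, 0.75, 1.0):
    low, high = interpolate_deaths(rigor)
    print(f"rigor={rigor:.2f}: {low:,.0f} to {high:,.0f} deaths")
```

At 50% rigor this gives 800,000 to 1,220,000 deaths, a range centered near the 1 million figure. Because epidemic growth compounds, the true relationship is probably not a straight line, so treat this as a sanity check on the arithmetic, not a forecast.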

The IHME model assumes every state has stay-at-home orders

It’s a simple fact that some states still don’t have stay-at-home orders. How are these people using models with assumptions that are observably inconsistent with reality? Again, the whole thing just smells rotten, which is more troubling than any particular quantitative quibble.

The IHME model assumes every US state is responding like China did (?!?!)

“It’s a valuable tool, providing updated state-by-state projections, but it is inherently optimistic because it assumes that all states respond as swiftly as China,” said Dean, a biostatistician at University of Florida.

How is this real?

But there are still more reasons to fear this model is underestimating the coming destruction…

Americans are less likely to go to doctors and hospitals

Americans face higher out-of-pocket costs for their medical care than citizens of almost any other country, and research shows people forgo care they need, including for serious conditions, because of the cost barriers… in 2019, 33 percent of Americans said they put off treatment for a medical condition because of the cost; 25 percent said they postponed care for a serious condition. A 2018 study found that even women with breast cancer — a life-threatening diagnosis — would delay care because of the high deductibles on their insurance plan, even for basic services like imaging. (Vox)

This is important for two reasons.

First, it means that Americans are probably less likely to seek testing, and if the model uses testing data as input, as it presumably does, then the model will underestimate the problem.

But second, it means that Americans, on average, may go to doctors and hospitals later in the course of their illness than the citizens of other countries. This actually has two implications: it would mean the model is underestimating the coming destruction because sick Americans are still hiding at home, but also that the longer-term fatality rate may be higher than projected, because Americans are less likely to seek and receive early-stage care that could save them.
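Here is a toy Python sketch of the first point, about testing. It assumes, hypothetically, that a model back-calculates infections from confirmed cases using a fixed testing (ascertainment) rate; I don't know whether the IHME model works this way, and every number below is made up purely for illustration. If the share of infected Americans who actually get tested is lower than the rate the model assumes, the back-calculated estimate comes out too low.

```python
# Toy illustration only: all numbers are hypothetical, and whether the
# IHME model back-calculates infections this way is an assumption.


def estimated_infections(confirmed_cases: int, assumed_ascertainment: float) -> float:
    """Back-calculate infections from confirmed cases, assuming a fixed
    fraction of infected people get tested and confirmed."""
    if not 0.0 < assumed_ascertainment <= 1.0:
        raise ValueError("ascertainment must be in (0, 1]")
    return confirmed_cases / assumed_ascertainment


TRUE_INFECTIONS = 100_000        # hypothetical ground truth
ACTUAL_US_RATE = 0.10            # hypothetical: fewer Americans seek testing
RATE_ASSUMED_BY_MODEL = 0.20     # hypothetical rate the model assumes

confirmed = round(TRUE_INFECTIONS * ACTUAL_US_RATE)   # cases actually observed
estimate = estimated_infections(confirmed, RATE_ASSUMED_BY_MODEL)

print(f"confirmed cases:      {confirmed:,}")
print(f"model's estimate:     {estimate:,.0f}")
print(f"true infections:      {TRUE_INFECTIONS:,}")
print(f"underestimate factor: {TRUE_INFECTIONS / estimate:.1f}x")
```

With these made-up rates the estimate is off by a factor of two; the point is only the direction of the error, not its size.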

Practical decisions should never be made on the basis of one model, anyway

Even if the model is the best possible model in the world, all statistical models are intrinsically characterized by what is called model uncertainty. You just never really know if you’re using the right model! All applied statisticians know this. For this reason, much applied data science leverages what are called ensemble methods: you run many models and combine them in some way, even if only by averaging their predictions.
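As a minimal sketch of the simplest kind of ensemble, here is plain averaging in Python. The model names and forecast numbers are hypothetical placeholders, not real COVID-19 projections.

```python
# Minimal ensemble sketch: combine several models' death forecasts by
# simple averaging. Model names and numbers are hypothetical placeholders.
from statistics import mean, median

forecasts = {
    "model_a": 90_000,
    "model_b": 240_000,
    "model_c": 600_000,
}

values = list(forecasts.values())
ensemble_mean = mean(values)          # equal-weight average of all models
ensemble_median = median(values)      # less sensitive to one outlying model
spread = max(values) - min(values)    # crude indicator of model disagreement

print(f"ensemble mean:   {ensemble_mean:,.0f}")
print(f"ensemble median: {ensemble_median:,.0f}")
print(f"spread:          {spread:,}")
```

The spread between the highest and lowest forecast is a crude signal of how much model uncertainty there is. More careful ensembles weight each model, for example by its past accuracy, rather than averaging them equally.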

So yeah, who knows what the optimal forecast is, but personally I will wager that the worst day of deaths will see more deaths than the IHME point estimates predict.

If you think I’m missing anything, I would love to hear what.

And while I’m at it, why not throw out a numerical prediction just to hold myself accountable later? Personally my guess is that we will exceed 500,000 deaths, based on the reasoning above. I am not highly confident given the obviously informal nature of my reasoning, but I would bet a modest amount of money that the IHME model is under-predicting the coming destruction. I hope I’m wrong.